We tend to use the human as the yardstick to measure things. Heidegger once bluntly said that this was the epiphany of modernity: “[t]hat period we call modern…is defined by the fact that man becomes the center and measure of beings. Man is what lies at the bottom of all beings, that is, in modern terms, at the bottom of all objectification and representability.” 3 Media theorist Eugene Thacker further debunks the myth of life and its seemingly indisputable authenticity of human incarnation: “Life is projected from subject to object, self to world, and human to nonhuman.” 4

We inflict a prognosis of human intelligence onto machines, and therefore render Artificial Intelligence a vision both sublimely grandiose and abjectly undeliverable. We are aware that science has yet to solve the riddle of human intelligence, nor has it understood the inner workings of the human brain. As professor of AI and Cognitive Science Brian Cantwell Smith contests: “For one thing, it would be premature to assume that what matters for our brain’s epistemic power is our general neural configuration. That is all that current [AI] architectures mimic.” 5 Philosopher John Searle has argued that “any attempt literally to create intentionality artificially (strong AI) could not succeed just by designing programs but would have to duplicate the causal powers of the human brain.” 6

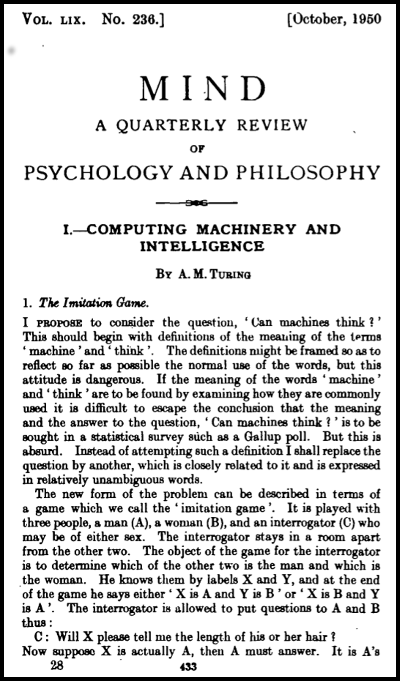

Yet perhaps the forerunners of modern computing machines had something else in mind when they thought about machine intelligence in the image of a thinking machine. When answering the question “Can machines think?” in his 1950 essay “Computing Machinery and Intelligence,” Alan Turing proposed his famous Imitation Game (also known as the Turing Test) as a counterargument to his own self-imposed question, writing: “The original question, ‘Can machines think?’ I believe to be too meaningless to deserve discussion.” Turing said instead “that in about fifty years' time it will be possible, to program computers, with a storage capacity of about 10⁹ [10 to the 9th power], to make them play the imitation game so well that an average interrogator will not have more than 70 per cent chance of making the right identification after five minutes of questioning.” 7 The computer will surely have enough computing power to masquerade as having intelligence. But it may also have an intelligence of its own, as Daniel Dennett speculated in his text “Can Machines Think?” He wrote: “Turing was not coming to the view (although it is easy to see how one might think he was) that to think is just to think like a human being, any more than he was committing himself to the view that for a man to think, he must think exactly like a woman. Men and women, and computers, may all have different ways of thinking. But surely, he thought, if one can think in one’s own peculiar style well enough to imitate a thinking man or woman, one can think well, indeed.” 8

In 1956 the Dartmouth “Summer Research Project on Artificial Intelligence” conference had already foregrounded and prescribed the high-sounding jargon of today’s AI industry, although the very term Artificial Intelligence could have been coined otherwise. John McCarthy, one of the progenitors of AI, attributed his use of the term to “escaping associations with ‘cybernetics’,” owing either to cynicism or to begrudging feelings toward Norbert Wiener, the founder of cybernetics, according to Nils Nilsson’s account.9 Marvin Minsky, then a junior fellow at Harvard University, proposed to design a feedback-loop apparatus: “the machine is provided with input and output channels and an internal means of providing varied output responses to inputs in such a way that the machine may be “trained” by a “trial and error” process to acquire one of a range of input-output functions. Such a machine, when placed in an appropriate environment and given a criterion of “success” or “failure” can be trained to establish “goal-seeking” behaviour.” 10 Claude Shannon, the celebrated inventor of information theory, proposed research on the “Application of information theory concepts to computing machines and brain models,” specifically the “brain model to automata,” while “Originality in Machine Performance” and “The Machine with Randomness” were research topics suggested by Nathaniel Rochester, another founder of AI research. If AI was not, as some cultural critics chided, “a marketing schtick” (Florian Cramer), it was certainly less artificial intelligence per se than a conglomerate of digital computing technology in general: machine learning, neural networks, data mining, statistical science and cognitive science. All of this was laid out in the 1950s, but it had to wait for the computing speed, storage capacity and new input-output equipment needed to realize a vision in which “There is considerable promise that systems can be built in the relatively near future which will imitate considerable portions of the activity of the brain and the nervous system.” 11

The later story became a familiar one: the highly anticipated triumph of Good Old Fashioned AI (GOFAI) in the 60s and 70s did not deliver what was promised, as aptly critiqued by Smith: “GOFAI’s ontological presumptiveness, its blindness to the subtleties of registration, and its inadequate appreciation of the world’s richness are the primary reason for its dismal record on commonsense.” 12 Philosopher Hubert L. Dreyfus’ infamous 1965 RAND Corporation report “Alchemy and Artificial Intelligence” on the state of AI already foreshadowed the demise of the promise of first-wave AI. He famously writes:

Early successes in programming digital computers to exhibit simple forms of intelligent behaviour, coupled with the belief that intelligent activities differ only in their degree of complexity, have led to the conviction that the information processing underlying any cognitive performance can be formulated in a program and thus simulated on a digital computer. Attempts to simulate cognitive processes on computers have, however, run into greater difficulties than anticipated.13

AI was coldly greeted by the first so-called “AI Winter” in the 1980s, when government research funding plummeted, business support pulled out, and university research into AI stagnated. With the advent and commercialization of the internet in the 90s and the thriving of social media platforms came a slow revival of AI research, and development has accelerated from the 2010s onward. The new generation is fascinatingly fueled by not-so-unfamiliar taglines such as Neural Networks, Data Mining, Machine Learning, etc. Today we seem to feel, touch, and breathe the ubiquity of Artificial Intelligence not only in work and play, but also in politics and our cultural imagination. Indeed, AI is everywhere and continues to expand. But as Smith also remarks: “That thinking, intelligence, and information processing is the fundamental ideas behind formal logic and computing [in the first wave AI], that they are not just things that humans do, but also things we can build automatic machines to do also underlies the second wave AI.” 14 (with author’s modification)

The most recent eye-popping AI headline comes from the technology news outlet TNW in January 2021. It reads as follows: “A trio of researchers from the Google Brain team recently unveiled the next big thing in AI language models: a massive one trillion-parameter transformer system. For example, you can go here and talk to a "philosopher AI" language model that'll attempt to answer any question you ask it.” The commentator also acknowledges: “While these incredible AI models exist at the cutting-edge of machine learning technology, it’s important to remember that they're essentially just performing parlor tricks. These systems don't understand language, they're just fine-tuned to make it look like they do.” 15

In other words, AI essentially does not understand semantics, or meaning. It simply follows syntactic rules to make logical inferences and output results as true or false. Let us also bear in mind what Smith states on the opening pages of his 2019 book The Promise of Artificial Intelligence: Reckoning and Judgment: “Neither deep learning, nor other forms of second-wave AI, nor any proposal yet advanced for third-wave, will lead to genuine intelligence.” 16

Here the pursuit of genuine intelligence tacitly means an Artificial General Intelligence comparable to that of the human mind. This returns us to the age-old AI conundrum. On the one hand, human intelligence, modeled on a bio-chemical brain, was and still is set up as the logical paradigm and ultimate goal, with AI imitating human cognition, perception and intention. On the other hand, an alternative notion of intelligence that is independent of the human enterprise might well open up a more liberating frontier for imagination and application in AI research. If AI (which would then probably also require another name) can be viewed and measured as an autonomous and autopoietic technical reality, as Gilbert Simondon long ago advocated, or if we follow Alan Turing’s ambiguous intimation of gender and species neutrality when invoking machine intelligence, then the dichotomy of us vs. AI, the imitation-induced competition and subordination between humans and AI machinery, and the master/slave duality might no longer hold as a valid thesis or antithesis.

Bernard Stiegler’s reading of Simondon offers insight into the reciprocity of “humanity” and “technicity.” He writes:

Interior and the exterior are consequently constituted in a movement that invents both one and the other: a moment in which they invent each other respectively, as if there were a technological maieutic of what is called humanity. The interior and the exterior are the same thing; the inside is the outside, since man (the interior) is essentially defined by the tool (the exterior). 18

Technical being as a human invention also exhibits its own autonomy. According to Brian Massumi’s elaboration on Simondon, “A technical invention does not have a historical cause, it has an ‘absolute origin’: an autonomous taking-effect of a futurity, an effective coming into existence that conditions its own potential to be as it comes. Invention is less about cause than it is about self-conditioning emergence.” 19 This autonomous taking-effect has been made ever more present by AI-inspired computational apparatuses, which increasingly manifest the potentiality of self-organizing, self-propagating systems of autopoiesis.

Viewed from this perspective, we can imagine an AI freed from the human measure of intelligence and see it as an agentic entity of another order, capable of a subjectivity other than that of humans, but symbiotic with humans.

Viewed this way, we can now turn to the exhibition AI Delivered: Abjection and Redemption that I mentioned at the beginning of this talk, as a case study of a different kind of AI art.

The exhibition is organized in two parts, with the first under the subtitle of The Abject.

Summarizing art since the 1970s as an outcry for The Return of the Real, the art historian Hal Foster has famously stated that the real would be the actual bodies and social sites recognized in the form of the traumatic and abject subject. He comments, “The shift in conception – from reality as an effect of representation to the real as a thing of trauma – may be definitive in contemporary art.” 20 If contemporary art is ineluctably a part of contemporary experience encroached upon by the pervasive presence of Artificial Intelligence, the new locality of abjection may lie precisely where AI’s instrumental hegemony reigns and dominates, perpetuated by capital’s greed and held in sway by political powers. But the site of abjection is also a site of resistance and creativity. The burden placed on AI by the excessive human desire to make it human-like is a misery waiting to be set free – this doppelganger narrative constitutes the curatorial framework of the first part of the exhibition.

Let’s look at some works in the show. Entering the gallery, viewers encounter the work Instinkt by Tonoptik: a glowing lamp that looks as if it has landed in a clearing. A precarious equilibrium takes place between the viewer and the lamp. Assisted by reinforcement learning algorithms, the lamp learns to develop a self-defense mechanism that shuns people who come too close to it and attracts them when a sense of safety is assured. The dynamics and intensity of the lamp’s glow, and the volume and nature of the generated sound, express an insecure neural network trying hard to make sense of potential danger or play. The feeble instinct of the machine awaits empathy.

Two black boxes sit across from each other. On the right is Casey Reas and Jan St. Werner’s moving-picture work titled Compressed Cinema. Generated by GANs (generative adversarial networks) trained on frames from Ken Jacobs’s 1969 film Tom, Tom, the Piper’s Son, the result is sequences of figures and objects reverberating with the soundscape. Folding in and folding out, both concretely abstract and abjectly sublime, these images are reminiscent of a Baroque beauty, incapacitating the garish wiggles of the DeepDream-induced hallucinatory visuality.

AI is often preposterous when it comes to comprehension by way of human perception. Devin Ronneberg and Kite construct a sensorium of body movements to tune in to a plethora of intricate phantom TV-land conspiracy theories, paranormal and extraterrestrial sightings, and recent news broadcasts of denial and defiance. The AI is certainly at a loss in attempting to give meaning to these ravings and delirium.

Likewise, HE Zike’s E-dream: We’ll stay, forever, in this way is a real-time website in which a supposedly intelligent program constantly adapts stories from media feeds. Information is processed into dialogues and a fragmentary story between Jack and Rose, an imaginary couple trying to have an intimate conversation. The drama played out by the machine learning algorithm, which culls fragments from English news channels to pair with images harvested from Chinese websites, only makes their romantic chat more ludicrous.

The second gallery presents two works. The Sense of Neoism?! is an Artificial Counter-Intelligence Machine, as self-styled by Sofian Audry and Istvan Kantor (aka Monty Cantsin). The artists declare, “[T]he installation explores the spirit of revolutionary avant-garde movements in the context of today’s technological society through a counter-intelligence machine that indefinitely generates blurbs, reflections, mottos, and nonsense.” Mix and match: the propaganda apparatus is to be unapologetically exploited by plugging and unplugging network cables to artificially deconstruct and reconstruct the neural network, and to give it life or cause its death. A technological substrate of wired life is witnessed as being delivered, stripped, and resurrected in the most visceral sense.

Lauren Lee McCarthy’s LAUREN is the artificial turned artful, intelligence performed absurd. In her own words: “I attempt to become a human version of Amazon Alexa, a smart home intelligence for people in their own homes. LAUREN is presented as a performative piece that begins with the interaction between an audience and a series of custom designed networked smart devices (including cameras, microphones, a kettle, speakers and other appliances). I remotely watch over the person residing in the cozy home-like environment and control all aspects of it. I aim to be better than an AI because I can understand them as a person and anticipate their needs.”

The second part of the exhibition, which will be mounted in November 2021, seeks to reclaim AI as having an autopoietic dimension of world-building, a part of “the ontological inseparability of intra-acting agencies,” to invoke Karen Barad’s concept of Agential Realism, for example. Acknowledging AI as a nonhuman agency that is nonetheless capable of an intelligence of its own accord as technical reality, the exhibition introduces a symbiotic ecology and the creative potential of a collective commons of humans and nonhumans, which is much needed to imagine a new horizon of posthuman cosmopolitics.

The work of the Mexico City-based international artist collective Interspecifics is a good case study of such creative potential and critical engagement. In their project titled Codex Virtualis, Interspecifics proposes to build “a systematic collection of AI-generated hybrid organisms that will emerge due to speculative symbiotic relationships between microorganisms and algorithms.” Their intention is worth quoting at length:

Codex Virtualis is an artistic research framework oriented towards the generation of an evolving taxonomic collection of hybrid bacterial-AI organisms. We aim as a result, to encounter novel algorithmically-driven aesthetic representations, tagged with a unique morphotype and genotype like encoding, and articulated around a speculative narrative encompassing unconventional origins of life on earth and elsewhere. The project stands on the idea of cooperation to expand on the concept of intelligence by including machine and non-human agents into its (re)configuration. And intends to articulate new schemes for the social imaginary to picture life outside the planet, and to better appreciate life on earth. 21

Allochronic Cycles is another exemplary work in the second part of the exhibition AI Delivered: Redemption by the artist duo Cesar & Lois, which, according to the artists, “imagines an artificial intelligence that learns from nature’s different timescales and responds to human time as it interacts with the cosmos, planet Earth, the plant Arabidopsis and COVID.” Allochronic Cycles is a kinetic installation with synchronous and asynchronous time cycles of natural processes, ranging from the broad development of the cosmos to the evolution of life on Earth to photosynthesis and plant processes and to the fast-paced life cycles of viruses. “Embedded in this interface is an AI trained with data of atmospheric carbon emissions and which uses time forecasting to predict future atmospheric carbon levels.” 22

We find in these works investigative inquiries that cut across the comfort zone of a public imagination perpetuated by the corporate agenda in AI research and applications. They open new conceptual spaces far more expansive and precarious than Candy Crush thrills, and conjure up new aesthetic potentials beyond the image-paradigm of AI rendition, expanding to the haptic, sensorial embodiment of machine sentience and the dynamics of software, hardware and wetware entanglement, as well as inter-species bricolage and reciprocity.

With these examples in mind, the anxiety implicit in the conference question, “AI Art: Is it a challenge to human creativity?”, can be reconciled through a symbiotic and co-evolutionary creativity enjoyed by humans and nonhuman AI alike, which at the same time unpacks the potential of a machine aesthetics unique to machine intelligence: a process of constant regeneration and invention, dynamic and adaptive to the environment in which it is situated, and kin to the Umwelt (environment) with which it is co-evolving and becoming. Of course, this must also be premised on a new type of AI ethics, one that is immune to the Pattern Discrimination 23 of algorithmic identity politics, which has engendered social segregation and racial exclusion, as Clemens Apprich, Wendy Chun, Florian Cramer and Hito Steyerl recently critiqued; and on an accountable ethics that lays open the AI industry’s intractable brands on Earth, Labor, Affect and Power, as well as on State and Space, and on Data itself, as articulated by Kate Crawford in her 2021 book Atlas of AI 24, among other recent literature. An AI so realized will no longer be seen as a projection of the human subject, but will be unleashed from the abjection inflicted by human hubris and embarrassment, capable of a subjectivity of its own order and magnitude in a cosmopolitically conscious co-existence of species and genus, machines and flesh, artificial or biological, planetary or bacterial, as some of the AI-inspired works discussed here have promised and delivered.

1. Joanna Zylinska, AI Art: Machine Visions and Warped Dreams (Open Humanities Press, 2020), p. 49.

2. Ibid., p. 77.

3. Martin Heidegger, Nietzsche, ed. David Farrell Krell, trans. John Stambaugh, David Farrell Krell, and Frank A. Capuzzi (New York: HarperCollins, 1991), vol. 4, p. 28.

4. Eugene Thacker, After Life (Chicago: University of Chicago Press, 2010), p. 3.

5. Brian Cantwell Smith, The Promise of Artificial Intelligence: Reckoning and Judgment (Cambridge: The MIT Press, 2019), p. 55.

6. http://cogprints.org/7150/1/10.1.1.83.5248.pdf, accessed 5/3/2022

7. https://academic.oup.com/mind/article/LIX/236/433/986238, accessed 5/3/2021

8. http://www.nyu.edu/gsas/dept/philo/courses/mindsandmachines/Papers/dennettcanmach.pdf, accessed 5/3/2021

9. Nils J. Nilsson, The Quest for Artificial Intelligence (New York: Cambridge University Press, 2010), p. 53.

10. http://jmc.stanford.edu/articles/dartmouth/dartmouth.pdf, accessed 5/3/2021

11. Nilsson, The Quest for Artificial Intelligence, p. 49.

12. Cantwell Smith, The Promise of Artificial Intelligence, p. 37.

13. https://www.rand.org/content/dam/rand/pubs/papers/2006/P3244.pdf, accessed 5/3/2021

14. Cantwell Smith, The Promise of Artificial Intelligence, pp. 19-20.

15. https://thenextweb.com/news/googles-new-trillion-parameter-ai-language-model-is-almost-6-times-bigger-than-gpt-3, accessed 5/3/2021

16. Cantwell Smith, The Promise of Artificial Intelligence, p. xiii

17. Cantwell Smith, The Promise of Artificial Intelligence, p. xiii

18. Bernard Stiegler, Technics and Time, The Fault of Epimetheus, trans. Richard Beardsworth & George Collins (Stanford: Stanford University Press, 1998), p. 142.

19. Brian Massumi, Arne De Boever, Alex Murray and Jon Roffe, “‘Technical Mentality’ Revisited: Brian Massumi on Gilbert Simondon,” in Gilbert Simondon: Being and Technology, eds. Arne De Boever, Alex Murray, Jon Roffe & Ashley Woodward (Edinburgh: Edinburgh University Press, 2013), p. 26.

20. Hal Foster, The Return of the Real (Cambridge: The MIT Press, 1996), p. 146.

21. http://interspecifics.cc/work/codex-virtualis-_/, accessed 5/3/2021

22. Artist project description

23. Clemens Apprich, Wendy Chun, Florian Cramer and Hito Steyerl, Pattern Discrimination (Minneapolis: University of Minnesota Press, 2019).

24. Kate Crawford, Atlas of AI (New Haven: Yale University Press, 2021).